Why Data Normalization is Essential in Cybersecurity: Google SecOps’ Unified Data Model

Discover how data normalization with Google SecOps' UDM boosts threat detection.

Vaibhavi Kadam

Security operations teams face more than a few challenges on a regular basis. One of the most persistent is data chaos.

Picture a scenario where firewall logs are stamped in UTC, endpoint alerts use local server time, and cloud logs use epoch format, while conflicting labels and user IDs scattered across tools create a barrier to effective threat detection and response. Reconciling these disparate formats manually consumes precious time during critical investigations, giving attackers an advantage.

The solution to this fragmentation is data normalization, which involves transforming data into a unified, consistent format. It bridges the gap between disparate sources, enabling security teams to operate efficiently.

In this post, we explore the reasons why data normalization is essential for effective security operations and how it enables seamless integration, faster analysis, and smarter decision-making. We’ll also showcase how Google SecOps’ Unified Data Model (UDM) can fit into this process.

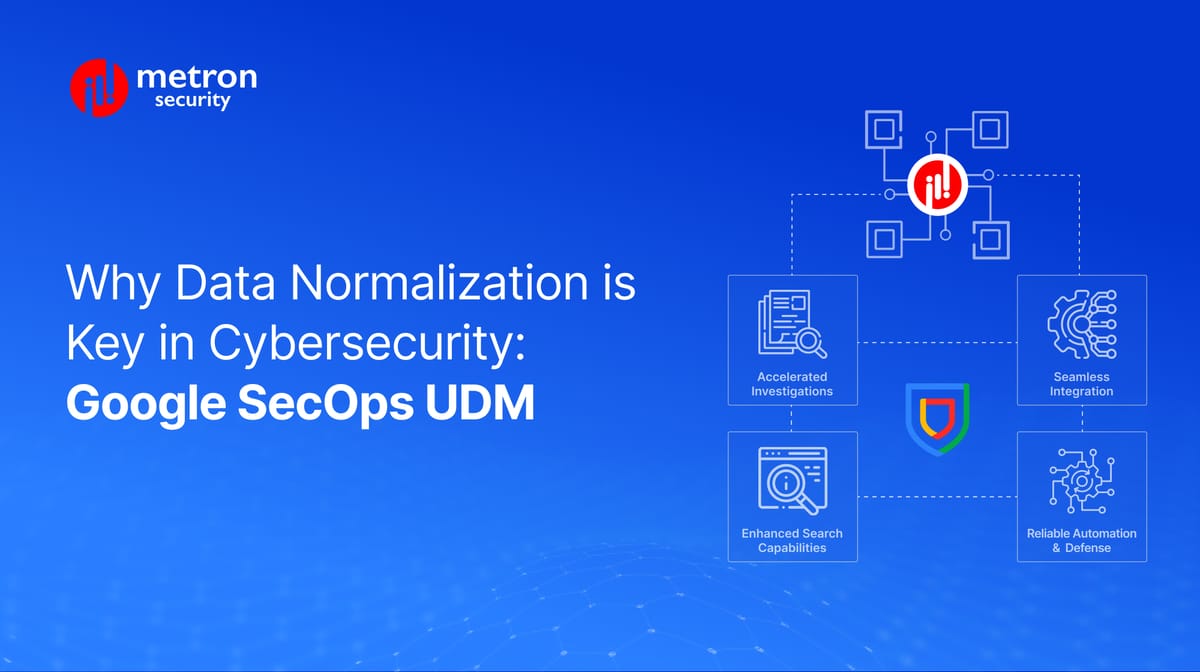

Why Normalization Matters: The Benefits of a Unified Language

Data normalization is an often-overlooked force multiplier in modern cybersecurity operations. Enforcing consistency across disparate datasets brings plenty of benefits.

Among the key ones:

Accelerated Investigations Through Consistency

Normalization standardizes critical data fields like timestamps, IP addresses, and user identifiers into a unified format. As a result, security teams no longer waste time reconciling mismatched formats across tools before they can compare events.
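The timestamp problem from the opening scenario makes a good small example. The sketch below is a minimal illustration, not how Google SecOps parsers work internally: it converts ISO 8601 strings and Unix epoch seconds into timezone-aware UTC datetimes using only Python's standard library. The `to_utc` helper and its format labels (`iso_utc`, `iso_local`, `epoch`) are hypothetical, and it assumes local-time sources include a UTC offset, since a bare local time cannot be converted without knowing the server's time zone.

```python
from __future__ import annotations

from datetime import datetime, timezone


def to_utc(raw: str | int | float, fmt: str) -> datetime:
    """Normalize a raw timestamp into a timezone-aware UTC datetime.

    `fmt` is a hypothetical label describing how the source encodes time:
      "iso_utc"   - ISO 8601 string ending in "Z" or "+00:00"
      "iso_local" - ISO 8601 string carrying the server's UTC offset
      "epoch"     - seconds since the Unix epoch
    """
    if fmt == "epoch":
        return datetime.fromtimestamp(float(raw), tz=timezone.utc)
    if fmt in ("iso_utc", "iso_local"):
        # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11 on
        parsed = datetime.fromisoformat(str(raw).replace("Z", "+00:00"))
        if parsed.tzinfo is None:
            # A bare local time is ambiguous without knowing the server's zone
            raise ValueError(f"timestamp has no UTC offset: {raw!r}")
        return parsed.astimezone(timezone.utc)
    raise ValueError(f"unknown timestamp format: {fmt!r}")


# The same instant as three hypothetical tools might report it
firewall = to_utc("2024-05-01T14:30:00Z", "iso_utc")
endpoint = to_utc("2024-05-01T20:00:00+05:30", "iso_local")
cloud = to_utc(1714573800, "epoch")

print(firewall.isoformat())  # 2024-05-01T14:30:00+00:00
assert firewall == endpoint == cloud
```

Once every source is converted this way, events from different tools can be sorted and compared on one clock.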

Seamless Integration Across Tools

By enforcing a unified schema, normalization makes tools interoperable and removes barriers between firewalls, EDRs, and cloud platforms. Security teams can replace brittle custom integrations with consistent cross-platform telemetry, enabling near-real-time alert correlation, such as quickly connecting suspicious logins flagged by an IAM tool to data exfiltration patterns.

For example, integrating IoT/OT security platforms with Google SecOps SIEM becomes simpler when both systems align with a normalized schema. Tools such as the BindPlane Agent act as mediators, translating IoT/OT-specific alerts into Google SecOps’ UDM format. This ensures that device-level risks in OT environments, such as unauthorized access, are automatically contextualized alongside IT threats in a single console.

Reliable Automation and Proactive Defense

Structured, normalized data makes automation more dependable. Machine learning models can detect anomalies with higher accuracy when they are trained on consistent fields, and SOAR playbooks can run containment workflows with fewer false positives caused by mislabeled data. For example, a rule flagging “PsExec” activity can work identically across endpoint and network logs, enabling proactive hunting for lateral movement.

Enhanced Search Capabilities Across Events

Normalization makes it easy to filter events on common field names by mapping disparate source keys to unified fields. For instance, if Source A uses "source_ip" and Source B uses "ip_address" for the same IP attribute, normalization remaps both to a common, consistent key. This allows analysts to search all events for a specific IP across every integrated tool with a single query.
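The remapping described above can be sketched in a few lines of Python. The rename tables, the shared key name `src_ip`, and the helper functions are hypothetical, chosen only to show the idea: rename each source's key to one shared key at ingestion, and a single filter then finds the IP across every source.

```python
from __future__ import annotations

# Hypothetical per-source rename tables: vendor key -> shared key
FIELD_MAP = {
    "source_a": {"source_ip": "src_ip"},
    "source_b": {"ip_address": "src_ip"},
}


def normalize(source: str, event: dict) -> dict:
    """Return a copy of the event with vendor-specific keys renamed."""
    renames = FIELD_MAP.get(source, {})
    return {renames.get(key, key): value for key, value in event.items()}


def search_by_ip(events: list[dict], ip: str) -> list[dict]:
    """One filter on the shared key covers every normalized source."""
    return [e for e in events if e.get("src_ip") == ip]


raw_events = [
    ("source_a", {"source_ip": "203.0.113.7", "action": "deny"}),
    ("source_b", {"ip_address": "203.0.113.7", "user": "jdoe"}),
    ("source_b", {"ip_address": "198.51.100.20", "user": "asmith"}),
]
normalized = [normalize(src, evt) for src, evt in raw_events]
hits = search_by_ip(normalized, "203.0.113.7")
print(len(hits))  # 2
```

Keys without a mapping pass through unchanged, so the rename tables can grow one source at a time.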

So, how can one achieve this? Well, out of the multiple tools around, here’s one worth considering.

Google SecOps’ UDM: A Blueprint for Normalization

The benefits we outlined above are not merely theoretical. They can be realized at scale through frameworks such as Google Security Operations’ (Google SecOps) Unified Data Model (UDM).

UDM is the architectural blueprint for normalization, tackling data chaos head-on by defining a standardized schema that maps all security-relevant data into consistent fields.

Whether it’s a phishing alert from a SIEM, a compromised endpoint alert, or a suspicious login attempt from an IAM system, each event lands in the same set of fields. This ensures that every tool, whether legacy or modern, operates from the same universal playbook.

To understand how these benefits work in practice, let's examine how UDM operates.

How does UDM work?

Let’s start with an example. A security team receives three alerts from different tools about a potential breach:

- Endpoint Tool: An alert referencing a cryptic endpoint_identifier.

- Identity System: A flagged login attempt from login_client_ip.

- Network Firewall: A log showing traffic to firewall_destination_port.

Manually linking these alerts is a nightmare, as each tool uses different labels for the same entity.

UDM overcomes this problem by transforming fragmented data into a standardized framework through these steps:

- Entity-Centric Normalization

First, UDM maps tool-specific fields to universal entities, eliminating vendor jargon:

- Endpoint tool’s endpoint_identifier → principal.hostname

- Identity system’s login_client_ip → principal.ip

- Firewall’s firewall_destination_port → target.port

Now, all logs describe the same compromised server with consistent labels, regardless of each tool’s internal terminology.

- Data Enrichment

After this, UDM adds meaning to raw data. For example:

- The principal.ip from the login attempt isn’t just an IP: it’s enriched with geolocation, user roles, and historical activity.

- The principal.hostname gains context: Is the server critical? Is it running outdated software?

This turns isolated alerts into a cohesive, prioritized narrative.

- Automated Correlation

Now, with the help of standardized fields, UDM connects the dots:

- Through asset enrichment, the principal.ip from the login attempt resolves to the same host named in the endpoint alert’s principal.hostname.

- The target.port in the firewall log ties to a known malicious pattern.

Result: A unified timeline showing the attacker’s path, from initial access to lateral movement.
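The three steps can be approximated with a short Python sketch. It is not how Google SecOps parsers or its enrichment pipeline are implemented; it only mirrors the logic of the walkthrough. It writes into the UDM paths `principal.hostname`, `principal.ip`, `target.port`, `metadata.product_name`, and `metadata.event_timestamp`, but simplifies them (real UDM stores `ip` as a repeated field). The sample values, the extra `firewall_source_ip` key, the `IP_TO_HOST` inventory, and the port watchlist are all hypothetical.

```python
from __future__ import annotations

from datetime import datetime

# Hypothetical raw alerts from the three tools in the example
RAW_ALERTS = [
    {"tool": "endpoint", "ts": "2024-05-01T14:31:00+00:00",
     "endpoint_identifier": "db-prod-01"},
    {"tool": "identity", "ts": "2024-05-01T14:30:00+00:00",
     "login_client_ip": "10.0.4.12"},
    {"tool": "firewall", "ts": "2024-05-01T14:35:00+00:00",
     "firewall_source_ip": "10.0.4.12",  # hypothetical extra field
     "firewall_destination_port": 4444},
]

# Step 1 table: tool-specific key -> UDM-style dotted path (simplified)
FIELD_MAP = {
    "endpoint_identifier": "principal.hostname",
    "login_client_ip": "principal.ip",
    "firewall_source_ip": "principal.ip",
    "firewall_destination_port": "target.port",
}

# Hypothetical enrichment context and threat watchlist
IP_TO_HOST = {"10.0.4.12": "db-prod-01"}
SUSPICIOUS_PORTS = {4444}


def set_path(obj: dict, path: str, value) -> None:
    """Write value into nested dicts at a dotted path such as 'target.port'."""
    *parents, leaf = path.split(".")
    for key in parents:
        obj = obj.setdefault(key, {})
    obj[leaf] = value


def to_udm(alert: dict) -> dict:
    """Step 1: entity-centric normalization into a UDM-like nested record."""
    event = {"metadata": {"product_name": alert["tool"],
                          "event_timestamp": alert["ts"]}}
    for key, value in alert.items():
        if key in FIELD_MAP:
            set_path(event, FIELD_MAP[key], value)
    return event


def enrich(event: dict) -> dict:
    """Step 2: add the hostname to events that only carry an IP."""
    principal = event.setdefault("principal", {})
    if "hostname" not in principal and principal.get("ip") in IP_TO_HOST:
        principal["hostname"] = IP_TO_HOST[principal["ip"]]
    return event


def timeline_for(host: str, events: list[dict]) -> list[dict]:
    """Step 3: correlate on the shared hostname and order by time."""
    related = [e for e in events if e["principal"].get("hostname") == host]
    return sorted(related, key=lambda e: datetime.fromisoformat(
        e["metadata"]["event_timestamp"]))


events = [enrich(to_udm(a)) for a in RAW_ALERTS]
timeline = timeline_for("db-prod-01", events)
flagged = [e for e in timeline
           if e.get("target", {}).get("port") in SUSPICIOUS_PORTS]

for e in timeline:
    print(e["metadata"]["event_timestamp"], e["metadata"]["product_name"])
```

Without the enrichment step, the login and firewall events would carry only an IP and would drop out of the host's timeline, which is why enrichment sits between mapping and correlation.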

While this example demonstrates the transformative power of normalization, operationalizing this approach across complex, multi-tool environments presents unique challenges. Here’s what organizations like yours should know.

Data Normalization Challenges

While frameworks like UDM provide a roadmap, achieving normalization at scale requires expertise. Legacy systems, hybrid environments, and evolving threats add layers of complexity. To address these challenges, organizations must:

- Audit their data ecosystems to identify format discrepancies.

- Prioritize flexible schemas that adapt to new tools and threats.

- Invest in automation to streamline normalization processes.

These steps demand more than just internal effort. They also require collaboration with partners who understand the intricacies of normalization and the urgency of cyber resilience.
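Auditing for format discrepancies, the first step in the list above, can start small. One hedged way to begin, sketched below in Python, is a coverage check: collect a few sample events per source and list the keys that no normalization mapping covers yet. The source names, sample events, and `MAPPED_KEYS` table are hypothetical, and a real audit would also compare value formats (for example, ISO 8601 versus epoch timestamps), not just key names.

```python
from __future__ import annotations

# Hypothetical sample events collected from each source during an audit
SAMPLES = {
    "firewall": [
        {"source_ip": "203.0.113.7", "dst_port": 443,
         "time": "2024-05-01T14:30:00Z"},
    ],
    "edr": [
        {"ip_address": "10.0.4.12", "host": "db-prod-01", "epoch": 1714573800},
        {"ip_address": "10.0.4.13", "host": "web-01", "epoch": 1714573860,
         "user": "jdoe"},
    ],
}

# Hypothetical keys each source already maps to the shared schema
MAPPED_KEYS = {
    "firewall": {"source_ip", "dst_port"},
    "edr": {"ip_address", "host"},
}


def audit(samples: dict[str, list[dict]],
          mapped: dict[str, set[str]]) -> dict[str, list[str]]:
    """Report, per source, keys seen in sample data that no mapping covers."""
    gaps = {}
    for source, source_events in samples.items():
        seen = set()
        for event in source_events:
            seen.update(event)  # collect every key the source emits
        missing = sorted(seen - mapped.get(source, set()))
        if missing:
            gaps[source] = missing
    return gaps


print(audit(SAMPLES, MAPPED_KEYS))
```

In this toy data, the unmapped keys on both sources are timestamp fields in two different encodings, which is exactly the kind of discrepancy an audit should surface before building mappings.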

Transforming Data into Defense: Metron’s Approach

At Metron, we understand that normalization isn’t just a technical exercise; it’s a strategic imperative. Our team specializes in helping organizations unify their security data landscapes, ensuring seamless integration with platforms like Google SecOps. From designing custom normalization pipelines to optimizing UDM adoption, we empower teams to:

- Eliminate data silos and tool sprawl.

- Enhance detection accuracy with enriched, context-aware data.

- Future-proof their infrastructure against emerging threats.

In a world where every second counts, normalized data isn’t a luxury. It’s the backbone of cyber resilience. We’d love to guide you there.

Ready to transform your security operations with a foundation built for speed, scale, and precision? Reach out to us at connect@metronlabs.com to learn how.