How to Enhance Data Analysis with OCSF

A platform-first guide to using the Open Cybersecurity Schema Framework (OCSF).

Shrishthi Dixit

It’s becoming increasingly clear that most security teams don’t lack tools. What they lack is a common language across tools.

All too often, cloud logs, endpoint telemetry, identity events, SaaS audit trails, and vulnerability findings arrive in different formats, with varying field names, semantics, and levels of context.

That means engineers often spend time stitching data instead of writing detections, analysts spend time translating fields instead of investigating, and leadership receives dashboards that are difficult to compare across environments.

Not great.

Fortunately, frameworks like OCSF help alleviate this issue by providing an open, vendor-neutral schema for security event logging and data normalization, allowing different producers to be mapped into a consistent structure for analysis and threat detection.

Let’s take a closer look.

What is OCSF

OCSF (Open Cybersecurity Schema Framework) is an open standard designed to normalize cybersecurity events and related data, enabling analytics tools to operate on a consistent model.

At a high level, OCSF gives you:

- Standardized event schema for security events (so “the same type of event” looks the same, regardless of source)

- Extensibility via profiles and extensions for domains and platforms

- Format-agnostic design, so it can be stored as JSON, Parquet, Avro, etc.

- Vendor neutrality, so the schema is not tied to one product’s naming conventions

OCSF building blocks in plain terms

OCSF is structured so you can describe security activity consistently without losing the details you need for investigations:

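- Categories: broad groupings such as System Activity, Findings, Identity & Access Management, Network Activity, and Application Activity

- Event classes: specific event types within a category (for example, Authentication within Identity & Access Management, or Process Activity within System Activity)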

- Objects: reusable “entities” that appear across events (like device, user, process, network connection)

- Attributes: standardized field definitions

- Profiles and extensions: optional layers to tailor OCSF to specific environments or platforms
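The following trimmed OCSF-style Authentication event, written as a Python dict, makes these building blocks concrete. The category, class, and activity IDs reflect the public OCSF schema as we understand it: 3 is Identity & Access Management, 3002 is Authentication, and 1 is Logon. The same applies to the `type_uid = class_uid * 100 + activity_id` convention. The product name, user, and IP are made up. A real event carries more required attributes, so check the schema version you target.

```python
# A trimmed, illustrative OCSF-style Authentication event.
# IDs follow OCSF conventions as we understand them; verify against your schema version.

IAM_CATEGORY_UID = 3             # Category: Identity & Access Management
AUTHENTICATION_CLASS_UID = 3002  # Event class: Authentication
LOGON_ACTIVITY_ID = 1            # Activity within the class: Logon


def type_uid(class_uid: int, activity_id: int) -> int:
    """OCSF derives type_uid as class_uid * 100 + activity_id."""
    return class_uid * 100 + activity_id


event = {
    "category_uid": IAM_CATEGORY_UID,
    "class_uid": AUTHENTICATION_CLASS_UID,
    "activity_id": LOGON_ACTIVITY_ID,
    "type_uid": type_uid(AUTHENTICATION_CLASS_UID, LOGON_ACTIVITY_ID),
    "time": 1700000000000,                   # epoch milliseconds
    "severity_id": 1,                        # Informational
    "status_id": 2,                          # Failure
    "user": {"name": "alice"},               # Object: reusable user entity
    "src_endpoint": {"ip": "203.0.113.10"},  # Object: network endpoint
    "metadata": {
        "version": "1.1.0",                  # OCSF schema version (example value)
        "product": {"name": "ExampleIdP", "vendor_name": "Example"},  # hypothetical producer
    },
}

print(event["type_uid"])  # 300201
```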

In practice, you map each source’s raw fields into OCSF fields. Your queries and detections can then be written against the normalized model rather than against per-source formats.
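The mapping idea can be sketched with two hypothetical producers, "IdP A" and "VPN B". Their raw field names (`actor`, `outcome`, `username`, `result`, and so on) are invented for illustration. Each producer gets its own small mapper into the same OCSF-style shape. The detection, `failed_logons` (also hypothetical), is written once against the normalized fields.

```python
from typing import Any

SUCCESS, FAILURE = 1, 2   # OCSF status_id values
AUTHENTICATION = 3002     # OCSF class_uid for Authentication
LOGON = 1                 # activity_id for Logon


def map_idp_a(raw: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical 'IdP A' format: actor / outcome / client_ip / ts."""
    return {
        "class_uid": AUTHENTICATION,
        "activity_id": LOGON,
        "time": raw["ts"],
        "status_id": FAILURE if raw["outcome"] == "FAILURE" else SUCCESS,
        "user": {"name": raw["actor"]},
        "src_endpoint": {"ip": raw["client_ip"]},
    }


def map_vpn_b(raw: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical 'VPN B' format: username / result / remote_addr / epoch_ms."""
    return {
        "class_uid": AUTHENTICATION,
        "activity_id": LOGON,
        "time": raw["epoch_ms"],
        "status_id": SUCCESS if raw["result"] == "ok" else FAILURE,
        "user": {"name": raw["username"]},
        "src_endpoint": {"ip": raw["remote_addr"]},
    }


def failed_logons(events: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Written once against the normalized model; no per-vendor branches."""
    return [e for e in events if e["class_uid"] == AUTHENTICATION and e["status_id"] == FAILURE]


events = [
    map_idp_a({"actor": "alice", "outcome": "FAILURE", "client_ip": "203.0.113.10", "ts": 1700000000000}),
    map_vpn_b({"username": "bob", "result": "denied", "remote_addr": "198.51.100.7", "epoch_ms": 1700000005000}),
    map_vpn_b({"username": "carol", "result": "ok", "remote_addr": "198.51.100.8", "epoch_ms": 1700000009000}),
]
print([e["user"]["name"] for e in failed_logons(events)])  # ['alice', 'bob']
```

Onboarding a third source means writing one more mapper. The detection logic stays the same.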

Where OCSF fits in modern security stacks

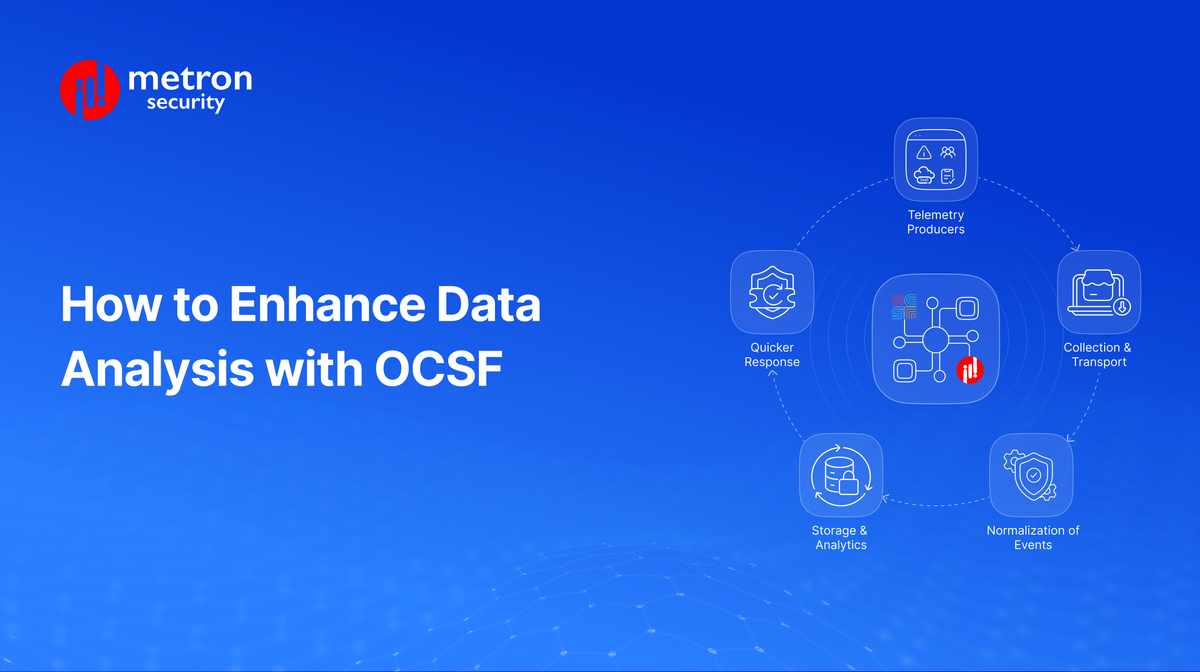

A common pattern looks like this:

- Telemetry producers (cloud services, endpoints, identity providers, SaaS, network controls) emit events in their native formats.

- Collection/transport moves events (streams, buckets, APIs, agents).

- Normalization maps events into OCSF (enrichment + transformation).

- Storage/analytics runs detections and investigations over the normalized dataset.

- Response triggers workflows and case management.

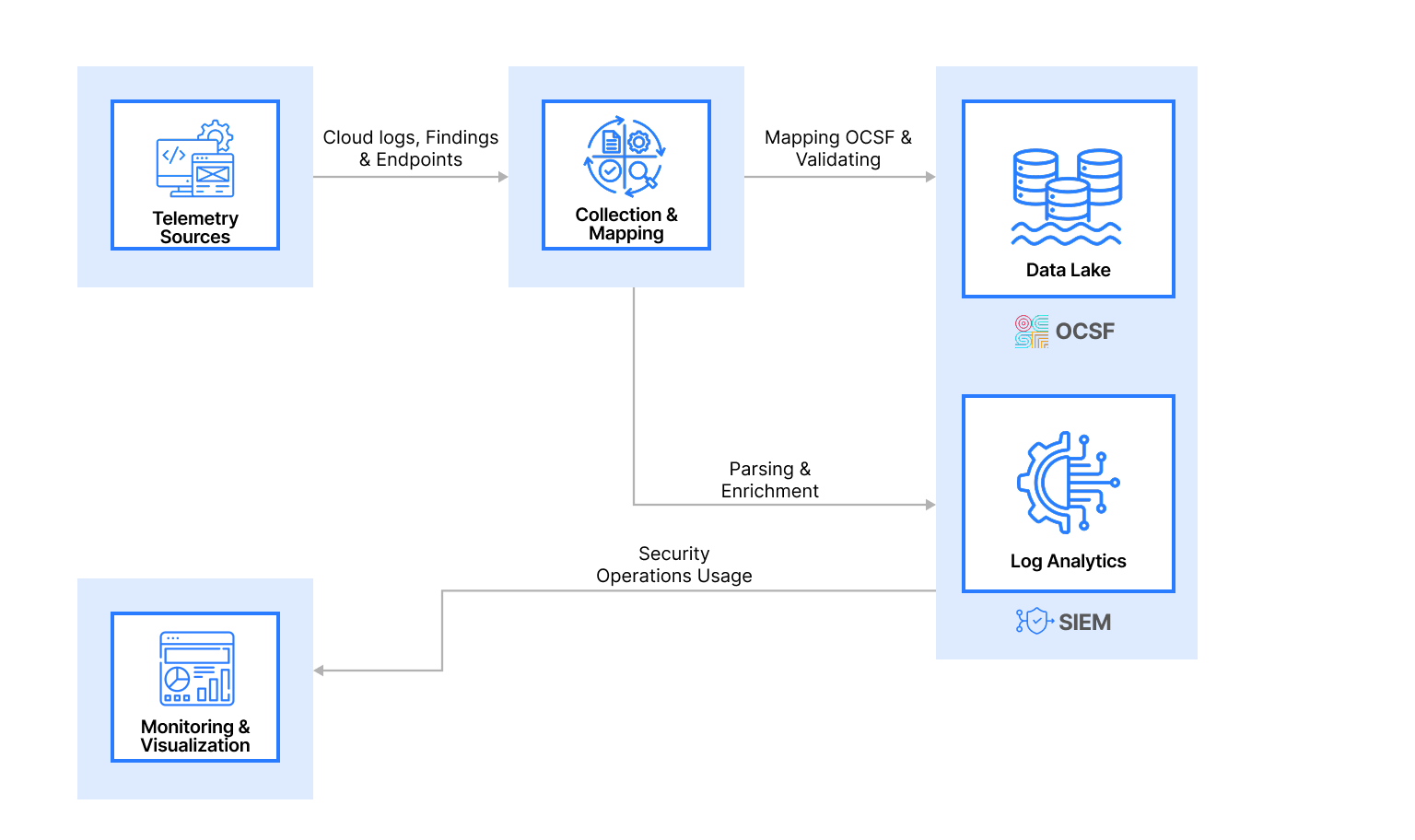

Amazon Security Lake is one example of a platform that standardizes security data using OCSF and stores it in a data lake optimized for analytics.

Architecture diagram

What improves when you normalize to OCSF

1) Faster correlation with fewer “translation steps”

When the same event type uses the same field model across sources, analysts can pivot across tools without rewriting queries per vendor or per log type. This is one of the central goals of OCSF: a “common language for threat detection and investigation.”

2) More portable detections and reusable analytics

Detections written against a normalized schema are easier to port across environments and data pipelines, because you’re not binding logic to one provider’s field names. OCSF is explicitly designed to enable consistent representation across tools and platforms.

3) Cleaner data engineering and more predictable pipelines

OCSF is intended to help data engineers map differing schemas to simplify ingestion and normalization, while keeping the schema extensible for domain needs.

Engineer-first view: what “mapping to OCSF” looks like

A practical mapping pipeline typically includes:

- Source identification: Decide which producers matter most (identity, endpoints, cloud control-plane logs, SaaS audit logs).

- Class selection: Pick the OCSF event classes that represent those producers.

- Field mapping: Map native fields to OCSF attributes and objects (keeping originals when needed for traceability).

- Enrichment: Add business context (owner, environment, application, asset criticality).

- Validation and governance: Enforce schema checks and track versioning as sources change.
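Here is a minimal sketch of the field-mapping and enrichment steps for a hypothetical EDR "process start" record. It keeps the original record in `raw_data` for traceability and preserves fields without an OCSF home in `unmapped`. It then attaches owner, environment, and criticality from a hypothetical asset inventory (`ASSET_CONTEXT`) through the base event's `enrichments` list. Where business context lands is a design choice, and other placements are possible. Check the Enrichment object's attributes for your schema version.

```python
import json

# Hypothetical asset inventory; in practice this comes from a CMDB or asset service.
ASSET_CONTEXT = {
    "web-01": {"owner": "payments-team", "environment": "prod", "criticality": "high"},
}
# Source fields this mapper knows how to place in OCSF attributes.
MAPPED_FIELDS = {"host", "pid", "cmd", "ts"}


def map_process_start(raw: dict) -> dict:
    """Map a hypothetical EDR record to an OCSF-style Process Activity (Launch) event."""
    return {
        "class_uid": 1007,   # Process Activity
        "activity_id": 1,    # Launch
        "time": raw["ts"],
        "device": {"hostname": raw["host"]},
        "process": {"pid": raw["pid"], "cmd_line": raw["cmd"]},
        # Keep the original record for traceability.
        "raw_data": json.dumps(raw, sort_keys=True),
        # Preserve source fields with no OCSF home instead of dropping them.
        "unmapped": {k: v for k, v in raw.items() if k not in MAPPED_FIELDS},
    }


def enrich(event: dict) -> dict:
    """Attach business context keyed on hostname, if the inventory knows the host."""
    host = event.get("device", {}).get("hostname")
    context = ASSET_CONTEXT.get(host)
    if context:
        event.setdefault("enrichments", []).append(
            {"name": "device.hostname", "value": host, "data": context}
        )
    return event


raw = {"host": "web-01", "pid": 4242, "cmd": "curl http://example.com",
       "ts": 1700000000000, "sensor_ver": "7.2"}
evt = enrich(map_process_start(raw))
print(evt["unmapped"])                               # {'sensor_ver': '7.2'}
print(evt["enrichments"][0]["data"]["criticality"])  # high
```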

If you’re building custom ingestion into an OCSF-based lake, the same flow applies: ingest raw logs, transform them to OCSF, and store them in a standardized format. Platform documentation often includes examples and reference pipelines for this flow.
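For the storage step, one common layout partitions normalized events by class and event date, so queries can prune on both. The sketch below writes JSON Lines using only the standard library. Production OCSF lakes typically use a columnar format such as Parquet. The `class_uid=<id>/date=<YYYY-MM-DD>` directory layout and the `store` helper are our assumptions, not requirements.

```python
import json
import tempfile
from collections import defaultdict
from datetime import datetime, timezone
from pathlib import Path


def partition_key(event: dict) -> str:
    """Partition by OCSF class and UTC event date (a common layout, not a mandated one)."""
    day = datetime.fromtimestamp(event["time"] / 1000, tz=timezone.utc).strftime("%Y-%m-%d")
    return f"class_uid={event['class_uid']}/date={day}"


def store(events: list[dict], root: Path) -> list[Path]:
    """Append normalized events as JSON Lines under root/<partition>/events.jsonl."""
    batches = defaultdict(list)
    for e in events:
        batches[partition_key(e)].append(e)
    written = []
    for key, batch in batches.items():
        path = root / key / "events.jsonl"
        path.parent.mkdir(parents=True, exist_ok=True)
        with path.open("a", encoding="utf-8") as fh:
            for e in batch:
                fh.write(json.dumps(e) + "\n")
        written.append(path)
    return written


with tempfile.TemporaryDirectory() as tmp:
    paths = store(
        [{"class_uid": 3002, "time": 1700000000000}, {"class_uid": 1007, "time": 1700000000000}],
        Path(tmp),
    )
    print(sorted(p.relative_to(tmp).as_posix() for p in paths))
    # ['class_uid=1007/date=2023-11-14/events.jsonl', 'class_uid=3002/date=2023-11-14/events.jsonl']
```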

Platform-first view: making OCSF real in production

If you want OCSF to actually improve investigations (not just look nice on a diagram), the focus needs to be on how raw telemetry is transformed into reliable, analysis-ready data, regardless of its source.

At a high level, OCSF-based pipelines follow a consistent pattern:

- Ingest raw logs and findings from source systems

- Enrich events with asset, identity, and environment context

- Map source-specific fields into OCSF classes, categories, and objects

- Validate schema conformance, handle versioning, and prevent drift
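The four stages can be chained as plain functions. Anything that fails is routed to a dead-letter list rather than being silently dropped. The following parts are illustrative assumptions:

- the raw record shape (`account`, `op`, `ts`)
- the account-to-environment lookup
- the choice of API Activity (class 6003, activity 99 = Other)

```python
import json
from typing import Any, Callable, Iterable

ACCOUNT_ENV = {"111111111111": "prod"}   # hypothetical enrichment lookup
REQUIRED = ("class_uid", "activity_id", "time", "cloud")


def ingest(line: str) -> dict:
    """Parse one raw JSON log line; malformed input raises ValueError."""
    return json.loads(line)


def enrich(raw: dict) -> dict:
    """Add environment context before mapping; unknown accounts are labeled, not dropped."""
    return {**raw, "environment": ACCOUNT_ENV.get(raw.get("account"), "unknown")}


def map_to_ocsf(raw: dict) -> dict:
    """Map a hypothetical cloud audit record to an OCSF-style API Activity event."""
    return {
        "class_uid": 6003,                # API Activity
        "activity_id": 99,                # Other; derive Create/Read/Update/Delete in practice
        "time": raw["ts"],
        "api": {"operation": raw["op"]},  # KeyError if the source omits the operation
        "cloud": {"provider": "AWS", "account": {"uid": raw["account"]}},
        "enrichments": [{"name": "cloud.account.uid", "value": raw["account"],
                         "data": {"environment": raw["environment"]}}],
    }


def validate(event: dict) -> dict:
    """Reject events missing the fields downstream analytics rely on."""
    missing = [f for f in REQUIRED if f not in event]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return event


def run_pipeline(lines: Iterable[str], stages: list[Callable[[Any], Any]]):
    """Run each line through every stage; failures go to a dead-letter list with the error."""
    good, dead_letter = [], []
    for line in lines:
        item: Any = line
        try:
            for stage in stages:
                item = stage(item)
            good.append(item)
        except (KeyError, ValueError) as exc:
            dead_letter.append({"input": line, "error": repr(exc)})
    return good, dead_letter


lines = [
    '{"account": "111111111111", "op": "ListBuckets", "ts": 1700000000000}',
    '{"account": "222222222222", "ts": 1700000000000}',  # no "op": fails in mapping
    "not json",                                          # malformed: fails in ingest
]
good, dead = run_pipeline(lines, [ingest, enrich, map_to_ocsf, validate])
print(len(good), len(dead))  # 1 2
```

The dead-letter list keeps each failed input together with its error. That makes it directly usable for fixing mappings and for tracking mapping-failure rates.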

This pattern applies equally to cloud-native pipelines, SaaS integrations, on-prem sources, and custom-built collectors.

Data modeling choices that keep detections stable

- Choose a “core OCSF subset” first (your top event classes and required fields).

- Add extensions intentionally (don’t extend on day 1 unless you know who will maintain it).

- Track OCSF versioning for your pipeline, so updates don’t silently break analytics.
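One way to enforce a core subset and a version pin is a small conformance check that runs before events reach analytics. OCSF events record the schema version they conform to in `metadata.version`. The class list, the per-class required fields, and the pinned version `1.1.0` below are hypothetical choices for illustration.

```python
# Hypothetical core subset: the classes supported on day one and the fields detections rely on.
CORE_SUBSET = {
    3002: {"time", "status_id", "user"},       # Authentication
    1007: {"time", "activity_id", "process"},  # Process Activity
    6003: {"time", "api", "cloud"},            # API Activity
}
# OCSF schema versions this pipeline has been tested against (example pin).
SUPPORTED_VERSIONS = {"1.1.0"}


def check_event(event: dict) -> list[str]:
    """Return a list of problems; an empty list means the event fits the core subset."""
    problems = []
    version = event.get("metadata", {}).get("version")
    if version not in SUPPORTED_VERSIONS:
        problems.append(f"unsupported OCSF version: {version!r}")
    required = CORE_SUBSET.get(event.get("class_uid"))
    if required is None:
        problems.append(f"class_uid {event.get('class_uid')} is outside the core subset")
    else:
        missing = sorted(required - set(event))
        if missing:
            problems.append(f"missing fields: {missing}")
    return problems


ok = {"class_uid": 3002, "time": 1, "status_id": 2, "user": {"name": "alice"},
      "metadata": {"version": "1.1.0"}}
drifted = {"class_uid": 3002, "time": 1, "user": {"name": "bob"},
           "metadata": {"version": "1.3.0"}}
print(check_event(ok))       # []
print(check_event(drifted))  # ["unsupported OCSF version: '1.3.0'", "missing fields: ['status_id']"]
```

A version bump then becomes an explicit change: you add the new version to `SUPPORTED_VERSIONS` after re-testing detections, rather than discovering the change through broken queries.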

Treat normalization as a product, not a one-time migration

- Build a clear owner model: who maintains mappings, who approves schema changes, who owns enrichment sources.

- Add health checks: ingestion volume, mapping failure rate, dropped events, invalid records, and schema drift.
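The health signals listed above can be computed per ingestion batch. In this sketch, schema drift is approximated as new source fields appearing in `unmapped`. The threshold, the alert names, and the field names are hypothetical.

```python
# Hypothetical alerting threshold; tune per source.
MAX_MAPPING_FAILURE_RATE = 0.02


def batch_health(received: int, events: list[dict], mapping_failures: int,
                 dropped: int, invalid: int, known_unmapped: set[str]) -> dict:
    """Summarize one ingestion batch into volume, failure, and drift signals."""
    # Drift proxy: source fields showing up in `unmapped` that we have not seen before.
    seen = {key for e in events for key in e.get("unmapped", {})}
    return {
        "volume": received,
        "mapping_failure_rate": mapping_failures / received if received else 0.0,
        "dropped": dropped,
        "invalid": invalid,
        "new_unmapped_fields": sorted(seen - known_unmapped),
    }


def alerts_for(health: dict) -> list[str]:
    """Turn health signals into alert names (hypothetical rules)."""
    alerts = []
    if health["mapping_failure_rate"] > MAX_MAPPING_FAILURE_RATE:
        alerts.append("mapping_failure_rate")
    if health["new_unmapped_fields"]:
        alerts.append("schema_drift")
    if health["volume"] == 0:
        alerts.append("no_data")
    return alerts


health = batch_health(
    received=200,
    events=[{"unmapped": {"sensor_ver": "7.2"}}, {"unmapped": {"tenant_tag": "eu"}}],
    mapping_failures=6, dropped=1, invalid=2, known_unmapped={"sensor_ver"},
)
print(health["mapping_failure_rate"], health["new_unmapped_fields"], alerts_for(health))
# 0.03 ['tenant_tag'] ['mapping_failure_rate', 'schema_drift']
```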

Where this helps most (common use cases)

- Cross-tool investigations: Pivot from an alert to identity, endpoint, and cloud activity using a consistent event model.

- Threat hunting at scale: Standardized fields enable reusable hunting queries across environments and accounts.

- Unified reporting: KPIs become comparable when “the same thing” is represented consistently.

- Multi-cloud security analytics: Normalize across AWS, Azure, and GCP control planes, rather than maintaining parallel query stacks.
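For hunting across environments, the same normalized fields support a single aggregation over every account and cloud. The sketch assumes Authentication events carry `cloud.provider` and `cloud.account.uid`, as they would with OCSF's cloud profile applied. The accounts, users, and threshold are made up.

```python
from collections import Counter

FAILURE = 2  # OCSF status_id: Failure


def failed_logon_hotspots(events: list[dict], threshold: int = 3) -> dict:
    """Count failed Authentication events per (cloud provider, account, user); keep those at or above threshold."""
    counts = Counter(
        (e["cloud"]["provider"], e["cloud"]["account"]["uid"], e["user"]["name"])
        for e in events
        if e.get("class_uid") == 3002 and e.get("status_id") == FAILURE
    )
    return {key: n for key, n in counts.items() if n >= threshold}


def auth(provider: str, account: str, user: str, status: int) -> dict:
    """Build a minimal normalized Authentication event for the demo."""
    return {"class_uid": 3002, "status_id": status, "user": {"name": user},
            "cloud": {"provider": provider, "account": {"uid": account}}}


events = (
    [auth("AWS", "111111111111", "alice", FAILURE)] * 3
    + [auth("Azure", "sub-01", "alice", FAILURE)] * 2
    + [auth("GCP", "proj-a", "bob", 1)] * 5   # successful logons are ignored
)
print(failed_logon_hotspots(events))  # {('AWS', '111111111111', 'alice'): 3}
```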

Bottom line

OCSF is valuable because it turns security data normalization into a shared contract: producers can emit or be mapped into a standard model, and detection teams can build analytics on a consistent foundation. The framework is explicitly vendor-neutral, extensible, and designed to support consistent representation of security events across tools and platforms.

If you’re evaluating OCSF adoption, the fastest path is usually platform-first: start with your highest-value telemetry sources, map a small but stable subset of event classes, and prove the investigation and detection wins before expanding to everything.

Metron can help you design the OCSF data model, implement normalization pipelines, and operationalize schema governance so it stays reliable as your sources evolve.

Drop us a note: connect@metronlabs.com